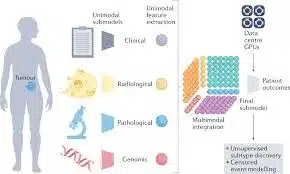

Traditional single-modal approaches often miss insights that only appear in the relationships between modalities. Multi-modal analysis brings together diverse data sources, such as text, images, and audio, to give a more complete view of a problem. This practice, called multi-modal data analytics, can improve prediction accuracy by giving a fuller picture of the problem at hand and by uncovering complex relationships that span modalities.

With the growing popularity of multimodal machine learning, it is increasingly important to analyze structured and unstructured data together to improve accuracy. This article explains what multi-modal data analysis is and walks through its key concepts and workflows.

Understanding Multi-Modal Data

Multimodal data combines information from two or more different sources, or modalities. These can include text, images, sound, video, numbers, and sensor readings. For example, a social media post that combines text and images is multimodal, as is a medical record containing clinicians' notes, X-rays, and vital-sign measurements.

Analyzing multimodal data calls for specialized methods that can model the interdependence between different types of data. A central concern in modern AI systems is fusion: combining modalities so that the system gains richer understanding and predictive power than single-modality approaches. This is particularly important in applications such as autonomous driving, medical diagnosis, and recommender systems.

What is Multi‑Modal Data Analysis?

Multimodal data analysis is a set of methods and techniques for exploring and interpreting datasets that contain multiple types of representation. It applies modality-appropriate methods to text, image, audio, video, and numerical data to discover hidden patterns and relationships between the modalities. The result is a more complete understanding than analyzing each source type separately would provide.

The main difficulty lies in designing techniques that fuse and align information from multiple modalities efficiently. Analysts must reconcile data with different structures, scales, and formats to surface meaning and recognize patterns and relationships across the business. In recent years, advances in machine learning, especially deep learning, have transformed multi-modal analysis: approaches such as attention mechanisms and transformer models can learn detailed cross-modal relationships.

Data Preprocessing and Representation

To analyze multimodal data effectively, each modality must first be converted into numerical representations that retain key information and can be compared across modalities. This preprocessing step is essential for effective fusion and analysis of heterogeneous data sources.

Feature Extraction

Feature extraction transforms raw data into a set of meaningful features that machine learning and deep learning models can use efficiently. Its goal is to identify the most important characteristics or patterns in the data so that the model's task becomes simpler. Some of the most widely used feature extraction methods are:

- Text: Words are converted into numbers (i.e., vectors). TF-IDF is a simple option when the vocabulary is small, while embeddings from models such as BERT or OpenAI's embedding models capture semantic relationships.

- Images: Activations from pre-trained convolutional neural networks (CNNs) such as ResNet or VGG serve as image features. These networks capture hierarchical patterns, from low-level edges to high-level semantic concepts.

- Audio: Audio signals are represented as spectrograms or Mel-frequency cepstral coefficients (MFCCs). These transformations convert audio from the time domain into a time-frequency representation, which highlights the most informative components of the signal.

- Time-series: Fourier or wavelet transforms convert temporal signals into frequency components. These transformations help uncover patterns, periodicities, and temporal relationships within sequential data.
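The text and time-series bullets can be illustrated with a small NumPy sketch. It is not a production pipeline: the whitespace tokenizer is an assumption, the IDF formula `log((1 + N) / (1 + df)) + 1` mirrors scikit-learn's default smoothing, and the function names `tfidf_matrix` and `dominant_period` are hypothetical. Image and audio features are left out because they depend on pre-trained models or dedicated audio libraries.

```python
import math
from collections import Counter

import numpy as np


def tfidf_matrix(docs):
    """Return (vocab, matrix) where matrix[i, j] is the TF-IDF weight of vocab[j] in docs[i].

    Assumes whitespace tokenization, raw term counts, and smoothed IDF:
    log((1 + N) / (1 + df)) + 1. Rows are L2-normalized.
    """
    tokenized = [doc.lower().split() for doc in docs]
    vocab = sorted({tok for toks in tokenized for tok in toks})
    index = {tok: j for j, tok in enumerate(vocab)}
    n_docs = len(docs)
    # Document frequency: in how many documents does each term appear?
    df = Counter(tok for toks in tokenized for tok in set(toks))
    idf = np.array([math.log((1 + n_docs) / (1 + df[tok])) + 1 for tok in vocab])
    matrix = np.zeros((n_docs, len(vocab)))
    for i, toks in enumerate(tokenized):
        for tok, count in Counter(toks).items():
            matrix[i, index[tok]] = count * idf[index[tok]]
    # Normalize rows so documents of different lengths are comparable.
    norms = np.linalg.norm(matrix, axis=1, keepdims=True)
    return vocab, matrix / np.where(norms == 0, 1, norms)


def dominant_period(signal, sample_rate):
    """Return the period (in seconds) of the strongest non-zero frequency component."""
    signal = np.asarray(signal, dtype=float)
    # Remove the mean so the zero-frequency (DC) bin does not dominate.
    spectrum = np.abs(np.fft.rfft(signal - signal.mean()))
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate)
    peak = np.argmax(spectrum[1:]) + 1  # skip the DC bin
    return 1.0 / freqs[peak]
```

For example, a 0.5 Hz sine wave sampled at 100 Hz for 10 seconds gives a dominant period of about 2 seconds, and in `tfidf_matrix(["red ceramic mug", "blue ceramic plate", "red bike"])` the rare term "mug" outweighs the shared term "ceramic" in the first document.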

Each modality has its own characteristics and therefore needs modality-specific techniques. Text processing involves tokenization and semantic embedding, while image analysis uses convolutions to find visual patterns. Audio signals are turned into frequency-domain representations, and time-series data is mathematically transformed to reveal hidden patterns and periodicities.

Representational Models

Representational models provide frameworks for encoding multi-modal information into mathematical structures, which enables cross-modal analysis and a deeper understanding of the data. Common approaches include:

- Shared Embeddings: All modalities are mapped into a single common latent space, so different types of data can be compared and combined directly in the same vector space.

- Canonical Correlation Analysis (CCA): CCA finds linear projections of two modalities whose projected values are maximally correlated. These correlated dimensions across data types support cross-modal comprehension.

- Graph-Based Methods: Each modality is represented as a graph, and similarity-preserving embeddings are learned from it. These methods capture complex relational patterns and allow network-based analysis of multi-modal relations.

- Diffusion Maps: Multi-view diffusion combines each modality's intrinsic geometric structure with cross-modal relations to perform dimensionality reduction across modalities. It reduces the dimensionality of high-dimensional multi-modal data while preserving local neighborhood structure.
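The canonical-analysis bullet usually refers to canonical correlation analysis (CCA). As a sketch under assumptions, classical two-view CCA can be computed in closed form with NumPy by whitening each modality's covariance and taking an SVD of the whitened cross-covariance. The ridge term `reg` and the names `cca` and `_inv_sqrt` are illustrative choices, not part of any particular library.

```python
import numpy as np


def _inv_sqrt(cov):
    """Inverse matrix square root of a symmetric positive-definite matrix."""
    vals, vecs = np.linalg.eigh(cov)
    return vecs @ np.diag(1.0 / np.sqrt(vals)) @ vecs.T


def cca(X, Y, n_components=1, reg=1e-6):
    """Classical CCA: return (canonical correlations, X weights, Y weights).

    X is (n_samples, p) features from one modality, Y is (n_samples, q) from another.
    A small ridge term `reg` keeps the covariance matrices invertible.
    """
    X = X - X.mean(axis=0)
    Y = Y - Y.mean(axis=0)
    n = X.shape[0]
    cxx = X.T @ X / (n - 1) + reg * np.eye(X.shape[1])
    cyy = Y.T @ Y / (n - 1) + reg * np.eye(Y.shape[1])
    cxy = X.T @ Y / (n - 1)
    # Whiten both views; the singular values of the whitened
    # cross-covariance are the canonical correlations.
    wx, wy = _inv_sqrt(cxx), _inv_sqrt(cyy)
    u, s, vt = np.linalg.svd(wx @ cxy @ wy)
    a = wx @ u[:, :n_components]
    b = wy @ vt.T[:, :n_components]
    return s[:n_components], a, b
```

Projecting each centered modality onto its weight vector gives paired scores whose correlation equals the first canonical correlation (up to the small ridge term); those projections are one simple way to build a shared space for two modalities.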

These models build unified structures in which different kinds of data can be compared and meaningfully combined. The goal is semantic equivalence across modalities: a system should understand that an image of a dog, the word “dog,” and a barking sound all refer to the same concept in different forms.

Fusion Techniques

This section covers the primary methods for combining multi-modal data: early, late, and intermediate fusion, along with the analytical scenarios each suits best.

1. Early Fusion Strategy

Early fusion combines data from all sources and types at the feature level, before model processing begins. This lets the model learn complex, hidden relationships between modalities directly.

Early fusion works especially well when modalities share common patterns and relations. It typically concatenates features from the various sources into a single combined representation, so it requires careful handling of differing data scales and formats.
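A minimal sketch of early fusion, assuming each modality has already been converted into a fixed-length feature vector per sample as described under Feature Extraction. Per-column z-scoring stands in for the careful handling of data scales; the helper names `zscore` and `early_fusion` are hypothetical.

```python
import numpy as np


def zscore(features, eps=1e-8):
    """Standardize each column to zero mean and unit variance."""
    features = np.asarray(features, dtype=float)
    return (features - features.mean(axis=0)) / (features.std(axis=0) + eps)


def early_fusion(*modalities):
    """Concatenate per-sample feature blocks after putting each on a common scale.

    Every block must have the same number of rows (one row per sample).
    """
    n_rows = {np.asarray(m).shape[0] for m in modalities}
    if len(n_rows) != 1:
        raise ValueError("all modalities must describe the same samples")
    # Scale each modality first so large-valued features (e.g. prices)
    # do not swamp small-valued ones (e.g. normalized embeddings).
    return np.hstack([zscore(m) for m in modalities])
```

In a real pipeline, the means and standard deviations should be computed on training data only and reused at inference time; computing them on the input itself, as this sketch does, is only for illustration.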

2. Late Fusion Methodology

Late fusion takes the opposite approach to early fusion. Instead of combining the data sources up front, it processes each modality independently and combines the results just before the final decision, so the final prediction is derived from the individual modality outputs.

Late fusion works well when each modality provides complementary information about the target variable. It also lets you reuse existing single-modal models without significant architectural changes, and it offers flexibility in handling modalities that are missing at test time.
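Late fusion can be sketched as a weighted average of per-modality class probabilities, which also shows how a missing modality is simply skipped. Weighted averaging is only one of several combination rules (majority voting and stacked meta-classifiers are others), and `late_fusion` and its weights are hypothetical.

```python
import numpy as np


def late_fusion(prob_by_modality, weights=None):
    """Combine per-modality class-probability vectors by a weighted average.

    prob_by_modality maps modality name -> probability vector, or None when that
    modality is missing for this sample. Missing modalities are skipped and the
    remaining weights are renormalized.
    """
    weights = weights or {}
    total = None
    weight_sum = 0.0
    for name, probs in prob_by_modality.items():
        if probs is None:
            continue  # modality unavailable: fall back to the others
        w = weights.get(name, 1.0)
        contribution = w * np.asarray(probs, dtype=float)
        total = contribution if total is None else total + contribution
        weight_sum += w
    if total is None:
        raise ValueError("no modality available for this sample")
    return total / weight_sum
```

With equal weights, `late_fusion({"text": [0.8, 0.2], "image": [0.6, 0.4]})` returns `[0.7, 0.3]`; if the image is unavailable (`"image": None`), the result falls back to the text model's `[0.8, 0.2]`.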

3. Intermediate Fusion Approaches

Intermediate fusion combines modalities at one or more intermediate processing levels, depending on the prediction task. It balances the benefits of early and late fusion, so models can learn both modality-specific patterns and cross-modal interactions.

Intermediate fusion adapts well to specific analytical requirements and data characteristics. It can be tuned to balance fusion quality against computational constraints, and this flexibility makes it well suited to complex real-world applications.
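One common form of intermediate fusion encodes each modality separately and then fuses the hidden representations before a shared prediction head. The NumPy sketch below shows only the forward pass with random, untrained weights; the class name `IntermediateFusionNet`, the layer sizes, and the ReLU/softmax choices are assumptions, and a real model would be trained end to end with a deep learning framework.

```python
import numpy as np


class IntermediateFusionNet:
    """Per-modality encoders -> fused hidden layer -> prediction head (forward pass only).

    Weights are random here; in practice they would be learned jointly by backpropagation.
    """

    def __init__(self, input_dims, hidden_dim, n_classes, seed=0):
        rng = np.random.default_rng(seed)
        # One encoder per modality maps its raw features into a hidden space.
        self.encoders = {
            name: rng.normal(scale=1.0 / np.sqrt(dim), size=(dim, hidden_dim))
            for name, dim in input_dims.items()
        }
        fused_dim = hidden_dim * len(input_dims)
        self.head = rng.normal(scale=1.0 / np.sqrt(fused_dim), size=(fused_dim, n_classes))

    def forward(self, inputs):
        # Modality-specific processing (the late-fusion-like part).
        hidden = [np.maximum(0.0, inputs[name] @ w) for name, w in self.encoders.items()]
        # Fuse the intermediate representations (the early-fusion-like part):
        # the head sees all modalities together and can model cross-modal interactions.
        fused = np.concatenate(hidden, axis=1)
        logits = fused @ self.head
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability for softmax
        probs = np.exp(logits)
        return probs / probs.sum(axis=1, keepdims=True)
```

Moving the concatenation earlier or later in the network slides the design toward early or late fusion, which is the flexibility this section describes.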

Sample End‑to‑End Workflow

This section walks through a sample SQL workflow that builds a multimodal retrieval system and performs semantic search within BigQuery. For simplicity, the multimodal data here consists only of text and images.

Step 1: Create Object Table

First, define an external object table, images_obj, that references unstructured files in Cloud Storage. This lets BigQuery treat the files as queryable data through an ObjectRef column.

```sql
CREATE OR REPLACE EXTERNAL TABLE dataset.images_obj
WITH CONNECTION `project.region.myconn`
OPTIONS (
  object_metadata = 'SIMPLE',
  uris = ['gs://bucket/images/*']
);
```

Here, the table images_obj automatically gets a ref column linking each row to a GCS object. This allows BigQuery to manage unstructured files, such as images and audio, alongside structured data while preserving their metadata and access control.

Step 2: Reference in Structured Table

Next, combine structured product attributes with ObjectRefs for multimodal integration by grouping each product's images into an array of ObjectRef values named image_refs.

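```sql
-- Assumes a hypothetical structured table, dataset.product_images, with one row
-- per (id, name, price, image_uri); the object table itself has no product columns.
CREATE OR REPLACE TABLE dataset.products AS
SELECT
  p.id,
  p.name,
  p.price,
  ARRAY_AGG(o.ref) AS image_refs  -- ObjectRef values from the object table's ref column
FROM dataset.product_images AS p
JOIN dataset.images_obj AS o
  ON o.uri = p.image_uri
GROUP BY p.id, p.name, p.price;
```

This step creates a products table that holds structured fields alongside linked image references, keeping each product's attributes and images in a single row for the embedding step.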

Step 3: Generate Embeddings

Now, we’ll use BigQuery to generate text and image embeddings in a shared semantic space.

```sql
-- Assumes project.dataset.multimodal_embedding_model is a BigQuery remote model
-- over a Vertex AI multimodal embedding endpoint.
CREATE OR REPLACE TABLE dataset.product_embeds AS
WITH text_embeds AS (
  -- Text input: the model reads a STRING column named content.
  SELECT id, ml_generate_embedding_result AS text_emb
  FROM ML.GENERATE_EMBEDDING(
    MODEL `project.dataset.multimodal_embedding_model`,
    (SELECT id, name AS content FROM dataset.products)
  )
),
image_embeds AS (
  -- Image input: pass the object table; its uri column is carried through.
  SELECT uri, ml_generate_embedding_result AS img_emb
  FROM ML.GENERATE_EMBEDDING(
    MODEL `project.dataset.multimodal_embedding_model`,
    TABLE dataset.images_obj
  )
)
SELECT p.id, p.name, t.text_emb, i.img_emb
FROM dataset.products AS p
JOIN text_embeds AS t ON t.id = p.id
JOIN image_embeds AS i ON i.uri = p.image_refs[SAFE_OFFSET(0)].uri;
```

This generates two embeddings per product: one from the product name and one from the product's first image. Both come from the same multimodal embedding model, which places them in the same embedding space, so they are aligned and can be compared directly across modalities.

Step 4: Semantic Retrieval

Once the cross-modal embeddings exist, querying them by semantic similarity returns products that match both text and image queries.

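```sql
WITH text_query AS (
  -- Embed the search phrase with the same model (text goes in a column named content).
  SELECT ml_generate_embedding_result AS query_emb
  FROM ML.GENERATE_EMBEDDING(
    MODEL `project.dataset.multimodal_embedding_model`,
    (SELECT 'eco-friendly mug' AS content)
  )
),
candidates AS (
  -- Stage 1: text-to-text vector search over product-name embeddings.
  SELECT base.id, base.name, base.img_emb
  FROM VECTOR_SEARCH(
    TABLE dataset.product_embeds, 'text_emb',
    (SELECT query_emb FROM text_query),
    query_column_to_search => 'query_emb',
    top_k => 10,
    distance_type => 'COSINE'
  )
),
image_query AS (
  -- Images must come from an object table; dataset.query_images_obj is a
  -- hypothetical one created like Step 1 with uris = ['gs://user/query.jpg'].
  SELECT ml_generate_embedding_result AS query_img_emb
  FROM ML.GENERATE_EMBEDDING(
    MODEL `project.dataset.multimodal_embedding_model`,
    TABLE dataset.query_images_obj
  )
)
-- Stage 2: re-rank the candidates by image-to-image cosine distance (smaller = closer).
SELECT c.id, c.name
FROM candidates AS c
CROSS JOIN image_query AS q
ORDER BY COSINE_DISTANCE(c.img_emb, q.query_img_emb);
```

This query performs a two-stage search: a text-to-text semantic search first filters candidates, and those candidates are then ordered by image-to-image similarity between each product's image and the query image. This extends search so that you can supply both a phrase and an image and retrieve semantically matching products.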
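Outside BigQuery, the ranking logic of Step 4 can be sketched in NumPy over in-memory embedding arrays. This is an illustration under assumptions: it uses exact (brute-force) cosine similarity rather than a vector index, the text and image arrays are assumed to be row-aligned per product, and the function names `cosine_similarity` and `two_stage_search` are hypothetical.

```python
import numpy as np


def cosine_similarity(matrix, query):
    """Cosine similarity between each row of matrix and a single query vector."""
    matrix = np.asarray(matrix, dtype=float)
    query = np.asarray(query, dtype=float)
    return matrix @ query / (np.linalg.norm(matrix, axis=1) * np.linalg.norm(query))


def two_stage_search(text_embs, img_embs, text_query, image_query, top_k=10):
    """Return product row indices: top_k by text similarity, re-ranked by image similarity."""
    img_embs = np.asarray(img_embs, dtype=float)
    text_scores = cosine_similarity(text_embs, text_query)
    # Stage 1: keep the top_k candidates by text-to-text similarity.
    candidates = np.argsort(-text_scores)[:top_k]
    # Stage 2: reorder only those candidates by image-to-image similarity, highest first.
    img_scores = cosine_similarity(img_embs[candidates], image_query)
    return candidates[np.argsort(-img_scores)]
```

Because stage 2 only reorders the `top_k` text matches, a product whose image matches the query image well but whose name does not will never surface; a larger `top_k` widens the first-stage net at extra cost.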

Benefits of Multi‑Modal Data Analytics

Multi-modal data analytics is changing how organizations get value from the variety of data available to them by integrating multiple data types into unified analytical structures. The value of this approach comes from combining the strengths of different modalities, which yield less effective insights when analyzed separately:

Deeper Insights: Multimodal integration uncovers complex relationships and interactions that single-modal analysis misses. By exploring correlations among different data types (text, image, audio, and numeric data) at the same time, it identifies hidden patterns and dependencies and builds a deeper understanding of the phenomenon under study.

Increased Performance: Multimodal models can achieve higher accuracy than single-modal approaches. The redundancy across modalities also makes analytical systems more robust, so they can still produce accurate results when one modality is noisy or has missing or incomplete entries.

Faster Time-to-Insight: SQL-based fusion capabilities speed up prototyping and analytics workflows because they provide quick access to insights from readily available data sources. This opens new opportunities for intelligent automation and improved user experiences.

Scalability: Building on native cloud capabilities for SQL and Python frameworks reduces reproducibility problems and speeds up deployment. As a result, analytical solutions can scale as data volumes and demand grow.

Conclusion

Multi-modal data analysis is a transformative approach that can unlock new insights by drawing on diverse information sources. Organizations are adopting these methods to gain competitive advantages through a more comprehensive understanding of complex relationships that single-modal approaches cannot capture.

However, success requires strategic investment, appropriate infrastructure, and robust governance frameworks. As automated tools and cloud platforms make these capabilities easier to access, early adopters can build lasting advantages in the data-driven economy. Multimodal analytics is rapidly becoming essential for succeeding with complex data.