Crawling is a vital part of SEO: if search engine bots can't crawl your website successfully, they can't index your pages. Crawl problems are a common reason why important pages are missing from search engines, including Google. A site with clear navigation helps bots crawl deeply and index more of its pages. To improve navigation and solve other crawl problems, here are 8 solid tips to increase the Google crawl rate of your website!

1. Update your content on a daily basis: Content is one of the most important criteria for search engines. Sites that update their content regularly tend to be crawled more frequently.

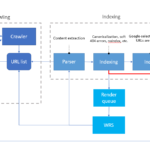

2. Create sitemaps: Submitting a sitemap is one of the first and simplest things you can do to help search engine bots discover your pages quickly.
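If your platform doesn't generate a sitemap for you, here is a minimal sketch of building one in the sitemaps.org XML format with Python's standard library. The `build_sitemap` function and the example URLs are hypothetical; most CMSs and SEO plugins can produce this file automatically.

```python
import xml.etree.ElementTree as ET

# Namespace required by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Build a sitemap from (url, lastmod) pairs; lastmod may be None.

    Hypothetical helper for illustration, not a standard API.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            # W3C date format, e.g. "2024-01-15".
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

Save the output as `sitemap.xml` at the root of your site, then submit its URL in Google Search Console.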

3. Do not use duplicate content: Copied content may reduce crawl rates. Search engines quickly detect duplicate content, which can lead to a drop in your crawl rate. It can also cause your site to rank lower than usual or, in serious cases, to be penalized. Offer unique, fresh content consistently.
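To audit your own site for copies, one simple approach is to normalize each page's text and compare hashes. This is a minimal sketch that assumes you already have the page text keyed by URL; `fingerprint` and `find_exact_duplicates` are hypothetical helpers, and they only catch exact duplicates after whitespace and case normalization, not near-duplicates or lightly reworded pages.

```python
import hashlib
import re
from collections import defaultdict

def fingerprint(text):
    """Hash page text after collapsing whitespace and lowercasing it."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

def find_exact_duplicates(pages):
    """Return sorted groups of URLs whose normalized text is identical."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    # Only groups with more than one URL are duplicates.
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]
```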

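4. Optimize and monitor your Google crawl rate: You can monitor your Google crawl rate with tools such as Google Search Console (formerly Google Webmaster Tools). Open the Crawl Stats report and analyze how often Googlebot visits your site and how quickly your server responds. Keep in mind that Google sets its own crawl rate: the old crawl-rate setting could only lower it, not raise it. To increase crawling, follow the other tips in this list and keep your server fast and reliable.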
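Alongside the Crawl Stats report, you can count Googlebot visits in your own server logs. This is a minimal sketch that assumes your server writes the common Apache/Nginx "combined" log format; `googlebot_hits_per_day` is a hypothetical helper. It trusts the user-agent string, which anyone can fake, so confirming that requests really come from Google would require a reverse DNS check.

```python
import re
from collections import Counter

# Matches the "combined" log format (an assumption about your server config):
# IP, identity, user, [day:time zone], "request", status, size, "referrer", "agent"
LOG_LINE = re.compile(
    r'^\S+ \S+ \S+ \[(?P<day>[^:\]]+):[^\]]*\] '
    r'"[^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_hits_per_day(lines):
    """Count requests per day whose user agent claims to be Googlebot."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        # Skip lines that don't parse or aren't from Googlebot.
        if match and "Googlebot" in match.group("agent"):
            counts[match.group("day")] += 1
    return dict(counts)
```

For example, `googlebot_hits_per_day(open("access.log"))` returns a dict mapping days such as `"10/Oct/2023"` to request counts, so you can see whether crawling picks up after you publish new content.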

5. Optimize your images: Crawlers cannot read images directly. If you use images, make sure to add alt attributes (often called alt tags) that describe them in text search engines can index. Images can appear in search results, and well-optimized images are far more likely to do so.
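To find images that still lack alt text, you can scan your HTML. This is a minimal sketch using Python's built-in `html.parser`; the `MissingAltFinder` class is hypothetical. It flags both missing and empty `alt` attributes, although an empty `alt=""` is the correct choice for purely decorative images, so review the results by hand.

```python
from html.parser import HTMLParser

class MissingAltFinder(HTMLParser):
    """Collect the src of every <img> with a missing or empty alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr_map = dict(attrs)
        alt = attr_map.get("alt")
        # alt is None when the attribute is absent or has no value.
        if alt is None or not alt.strip():
            self.missing.append(attr_map.get("src", "(no src)"))
```

Usage: create a finder, call `finder.feed(html)` with a page's source, then read `finder.missing`.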

6. Link your blog posts: Internal linking passes link value between your pages and helps search engine bots reach deep pages of your site. When you publish new content, go back to related older posts and add links to the new post.
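One way to see which pages internal links fail to reach is to compute each page's click depth from the homepage with a breadth-first search. This is a minimal sketch that assumes you already have a map of each page to the internal pages it links to (for example, from a site crawler export); `click_depths`, `orphan_pages`, and the example paths are hypothetical. Deep pages and orphans are good candidates for new links from related posts.

```python
from collections import deque

def click_depths(links, home):
    """Breadth-first search: number of clicks from home to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def orphan_pages(links, home):
    """Pages that exist in the link map but can't be reached from home."""
    all_pages = set(links)
    for targets in links.values():
        all_pages.update(targets)
    return sorted(all_pages - set(click_depths(links, home)))
```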

7. Make use of ping services: Pinging is a simple way to announce your site and let bots know when its content has been updated. There are many ping services you can use manually, such as Pingomatic.
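Ping services like Pingomatic traditionally accept an XML-RPC call named `weblogUpdates.ping` with your site's name and URL. This is a minimal sketch using Python's built-in `xmlrpc.client`; the endpoint URL is an assumption taken from common blog-software defaults, and `build_ping_payload` and `send_ping` are hypothetical helpers. Check the service's current documentation before relying on it.

```python
import xmlrpc.client

# Assumed endpoint; verify it with the ping service before use.
PING_ENDPOINT = "http://rpc.pingomatic.com/"

def build_ping_payload(site_name, site_url):
    """Return the XML-RPC request body for a weblogUpdates.ping call."""
    return xmlrpc.client.dumps((site_name, site_url), methodname="weblogUpdates.ping")

def send_ping(site_name, site_url, endpoint=PING_ENDPOINT):
    """Send the ping over the network and return the service's response."""
    proxy = xmlrpc.client.ServerProxy(endpoint)
    return proxy.weblogUpdates.ping(site_name, site_url)
```

`build_ping_payload` lets you inspect the request offline; `send_ping("My Blog", "https://example.com/")` actually contacts the service.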

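8. Block access to pages that aren't needed: Use your robots.txt file to block crawling of pages that don't belong in search results, such as back-end folders and admin pages, so bots spend their time on the pages you want indexed. Note that robots.txt controls crawling rather than indexing, so a blocked URL can still appear in results if other sites link to it.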
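Before you publish a robots.txt file, you can check which URLs it blocks. This is a minimal sketch using Python's built-in `urllib.robotparser`; the rules, the `/admin/` and `/wp-admin/` paths, and `build_parser` are hypothetical examples. Python's parser uses simple prefix matching, so it doesn't replicate Google's support for `*` and `$` wildcards.

```python
from urllib.robotparser import RobotFileParser

# Example rules: block back-end areas for all bots and point them at the sitemap.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /wp-admin/
Sitemap: https://example.com/sitemap.xml
"""

def build_parser(robots_txt):
    """Parse robots.txt text without fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser
```

Then `build_parser(ROBOTS_TXT).can_fetch("Googlebot", url)` tells you whether a given URL is crawlable under these rules.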